I’ve been using the Azure DevOps service for a few months now, primarily because Microsoft offer a free tier of hosted macOS build agents. That means we can build, sign and upload packages automatically, to our MDM service and even to Amazon Web Services. But how did I get here?

Last year I was fortunate enough to be able to attend the MacDevOps conference in Vancouver. My first trip to Canada. It’s a fantastic conference, and if you get the chance to attend I highly recommend doing so.

One of the sessions was a lightning talk by Jessica Deen on building anything in Visual Studio Team Services (since rebranded as Azure DevOps).

I sat through it, smiled, nodded, didn’t get it, but tucked the knowledge away for the future. I dunno, I’m not an iOS developer, and don’t build Xcode projects all the time - so why do I need to build things in the cloud? But interesting nonetheless.

And then…

All of a sudden I find myself with a use case! Apple’s MDM specification only allows signed packages to be deployed via MDM, and we have a requirement to build and re-build our initial deployment package on a schedule. What we need is some kind of hosted build server… Amazon don’t have one, Google’s Cloud Platform doesn’t offer one, so what are the options?

CircleCI has a paid-for hosted Mac offering… but wait… that talk… what about Microsoft?

There’s a free tier, so why not take Azure DevOps for a spin? Anything you learn can be mostly applied to another tool if required, right?

Pipelines Pipelines Pipelines

Now, I’m not an expert here. CI/CD and build pipelines are the mysterious tools of real developers (whoever they actually are), not for folks like me, right?

Well, perhaps I’m oversimplifying, but really, a build pipeline is just a series of steps run in order to do things… I mean, doesn’t that sound like a batch or shell script?

I can write those… and indeed, most of my build steps are a series of bash commands, so if you can do scripting, you can do pipelines.

Azure pipelines are defined using YAML syntax and are fairly easy for humans to read and understand.
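To make the “it’s basically a shell script” point concrete, here is a minimal sketch of a pipeline made of two plain script steps. The step names and commands are placeholders of my own, not taken from a real project:

```yaml
# azure-pipelines.yml: a minimal sketch; each step is just shell commands run in order
pool:
  vmImage: 'macOS-latest'   # a Microsoft-hosted macOS agent image

steps:
- script: |
    sw_vers            # print the macOS version of the build agent
    xcode-select -p    # show which Xcode developer directory is active
  displayName: 'Inspect the build agent'

- script: echo "Hello from step two"
  displayName: 'A second, separate step'
```

Read top to bottom, it is the same thing you would put in a bash script, just split into named steps that show up separately in the build log.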

Can’t someone else do it?

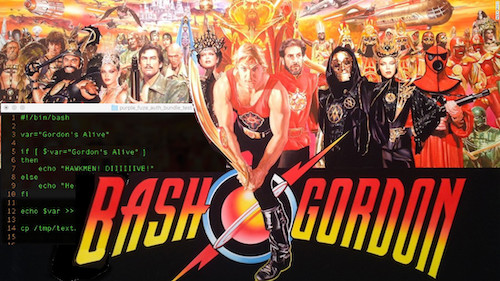

And even if defining a build pipeline is too far from your comfort zone, Microsoft have made it easier still. Azure has a GUI pipeline designer, where you can create your build steps using a fairly friendly interface.

Then, if you want to share what you’ve built, you can export that pipeline to YAML, store it in version control, and share it with the world… or at least with the rest of your team.
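Once it is in version control, the YAML file (conventionally `azure-pipelines.yml` at the root of the repo) can also say *when* to run. A sketch of the top of such a file, above the `pool` and `steps` sections, assuming a branch called `master` and a weekly rebuild time picked arbitrarily, which would cover a “re-build on a schedule” requirement:

```yaml
# Run whenever something is pushed to master (branch name assumed)
trigger:
- master

# Also rebuild on a timetable; cron times are in UTC.
# Monday 06:00 is an arbitrary example, not a recommendation.
schedules:
- cron: '0 6 * * 1'
  displayName: 'Weekly rebuild'
  branches:
    include:
    - master
  always: true   # run even if nothing has changed since the last scheduled run
```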

Azure, really?

So, right now it feels like Amazon Web Services (AWS) is the 1,000-pound gorilla in the room (or in the cloud?). It’s the platform most tech folks use, or are at least vaguely familiar with.

And it seems the all-new Microsoft appreciate that. Azure DevOps has a really nice AWS integration (the AWS Toolkit for Azure DevOps extension, published by AWS), so we can use Microsoft’s tooling to build our Mac packages and apps, then push them out to AWS S3 as part of the pipeline.

Neat, right?

Can I see an example?

Certainly!

```yaml
pool:
  vmImage: 'macOS-latest'   # Microsoft-hosted macOS agent image

steps:
- script: |
    pip install awscli   # may be redundant: the hosted macOS images generally ship the AWS CLI
  displayName: 'Let''s get started - install AWS CLI'

- script: 'git clone https://github.com/munki/munki-pkg.git'
  displayName: 'Clone MunkiPkg'

- script: './munki-pkg/munkipkg munki-pkg/TurnOffBluetooth/'
  displayName: 'Build an example package'

- task: S3Upload@1
  displayName: 'S3 Upload: look I can upload to Amazon :)'
  inputs:
    awsCredentials: 'AWS S3 ACCESS'   # name of the AWS service connection in Azure DevOps
    regionName: 'eu-west-2'
    bucketName: 'steveq-azure-demo-bucket'
    sourceFolder: 'munki-pkg/TurnOffBluetooth/Build/'
    globExpressions: '*.pkg'
```

Which I stuck in GitHub.

AWS integration

Once the AWS tools are installed in Azure DevOps, you can go off to AWS IAM, create an access key (an access key ID and secret access key) with appropriate permissions, and add it to your Azure account. Note how I quickly gloss over how this is done…

(At a basic level, I’ve created an API-only IAM user with permissions to access AWS S3. I then created a service connection in Azure that uses those credentials, so my Azure pipelines now have permission to create S3 objects.)
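A cheap way to confirm the service connection works before trying anything cleverer is a step that asks AWS who it thinks you are. This is a sketch: `AWS S3 ACCESS` and the bucket are the names from the earlier example, so substitute your own.

```yaml
- task: AWSShellScript@1
  displayName: 'Check the AWS service connection'
  inputs:
    awsCredentials: 'AWS S3 ACCESS'   # name of the Azure service connection
    regionName: 'eu-west-2'
    scriptType: inline
    inlineScript: |
      # Prints the account ID and IAM user ARN the credentials belong to
      aws sts get-caller-identity
      # Needs s3:ListBucket; drop this line if the user can only write objects
      aws s3 ls s3://steveq-azure-demo-bucket/
```

`get-caller-identity` needs no IAM permissions of its own, so if it fails the problem is the credentials or the service connection rather than your S3 policy.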

Now you have access to a number of AWS features and services. One example, further down, is generating an AWS pre-signed URL with the AWSShellScript task.

Gotchas?

Exporting variables between build steps is hard, or rather, it took some figuring out. I did this:

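```yaml
- task: AWSShellScript@1
  displayName: 'AWS Shell Script - Generate our pre-signed URL'
  inputs:
    awsCredentials: '$(awscreds)'
    regionName: '$(aws_region)'
    scriptType: inline
    inlineScript: |
      # package_bucket and stage are Azure pipeline variables, expanded before the script runs
      url=$(package_bucket)-$(stage)/bootstrap_$(stage).json
      # Plain shell command substitution: Azure leaves it alone as it isn't a variable name
      # 604800 seconds = 7 days, the longest expiry a SigV4 pre-signed URL allows
      var=$(aws s3 presign s3://$url --expires-in 604800)
      echo "This is a signed URL $var" #Just checking this works!
      echo "##vso[task.setvariable variable=signed_url]$var"
      # The line above is Azure's logging-command syntax for setting a pipeline variable;
      # signed_url becomes visible to later steps in this job, not to this step
```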

Which works, but seems clunky. In my initial testing I tried to echo the variable in the SAME build step, and it didn’t work… but it was available in subsequent build steps. It took longer than I’d like to admit to notice this…
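For completeness, reading the value back in a later step of the same job looks something like this sketch. `signed_url` is the variable set by the pre-signed URL step; non-secret pipeline variables are also exposed to later script steps as upper-cased environment variables, so either form should work.

```yaml
# A later step in the same job as the AWSShellScript step
- script: |
    # Macro syntax: Azure substitutes the value before the script runs
    echo "Macro syntax: $(signed_url)"
    # Environment variable: pipeline variables are mapped to upper-case names
    echo "Env var: $SIGNED_URL"
  displayName: 'Use the pre-signed URL'
```

If you’d rather not print the URL into build logs, the logging command also accepts `issecret=true` (`##vso[task.setvariable variable=signed_url;issecret=true]$var`). Secret variables are masked in logs and are not mapped into the environment automatically, so a later step would need an explicit `env:` mapping to read one.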

Likewise with secure files: you can reference them in your YAML by UID, but it’s not clear (to me) from the docs where you’re supposed to find the UID of the item you’re referencing.

```yaml
- task: InstallAppleCertificate@2
  displayName: 'Install an Apple certificate'
  inputs:
    certSecureFile: 'UID value of an uploaded secure item'
    certPwd: '$(P12password)'   # the .p12 password, ideally kept as a secret variable
```

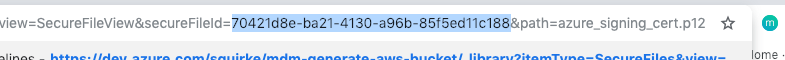

I figured it out in the end (select the item in the Web UI - and the UID can be found in the URL bar in your browser)

Like this:

What could you use this for?

There are all sorts of things, but off the top of my head: I’m planning to build a package signing service, so my colleagues can commit valid munkipkg code and collect a signed package from an AWS S3 bucket at the other end.
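As a rough sketch of the signing step such a service might need: this assumes a Developer ID Installer certificate has already been installed with the InstallAppleCertificate task into a keychain `productsign` can see, and it reuses the munki-pkg example paths. The identity name and both package file names are hypothetical placeholders.

```yaml
# Sketch only: identity and file names below are hypothetical
- script: |
    set -euo pipefail
    cd munki-pkg/TurnOffBluetooth/Build
    # Sign with a Developer ID Installer identity (hypothetical name)
    productsign --sign "Developer ID Installer: Example Org (TEAMID1234)" \
      TurnOffBluetooth-unsigned.pkg TurnOffBluetooth.pkg
    # Check the signature before it goes anywhere near MDM
    pkgutil --check-signature TurnOffBluetooth.pkg
    # Remove the unsigned copy so a '*.pkg' upload glob only finds the signed one
    rm TurnOffBluetooth-unsigned.pkg
  displayName: 'Sign the package (sketch)'
```

An S3Upload step like the one in the first example could then follow, so the signed package lands in the bucket.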

Or a lightweight AutoPkg box that keeps your AWS-hosted Munki server stocked with packages and patches, without requiring a local Mac server.

I’m sure there are lots of other things you could do…

So I did a talk about this at London Apple Admins… here’s the video version of this post.